Initial commit - exported from wordpress

This commit is contained in:

42

content/post/2023-05-21-hilger-grading-portal.md

Normal file

@ -0,0 +1,42 @@

|

||||

---

|

||||

author: mikeconrad

|

||||

categories:

|

||||

- Software Engineering

|

||||

date: "2023-05-21T16:07:50Z"

|

||||

image: /wp-content/uploads/2024/03/hilger-portal-home.webp

|

||||

tags:

|

||||

- Portfolio

|

||||

title: Hilger Grading Portal

|

||||

|

||||

---

|

||||

|

||||

Back around 2014 I took on my first freelance development project for a homeschool co-op here in Chattanooga called [Hilger Higher Learning](https://www.hhlearning.com/). The problem they were trying to solve involved managing grades and report cards for their students. In the past, they had a developer build a rudimentary web application that allowed them to enter grades for students; however, it lacked any sort of concurrency control, meaning that if two teachers were making changes to the same student at the same time, teacher B’s changes would overwrite teacher A’s changes. This was obviously a huge headache.

|

||||

|

||||

I built out the first version of the app using PHP, HTML, CSS and DataTables with lots of jQuery sprinkled in. I built in custom functionality that allowed them to compile and print the report cards for all students with the click of a button. It was a game changer for them and streamlined the process significantly.

|

||||

|

||||

That system was in production for 5 years or so with minimal updates and maintenance. I recently rebuilt it using React and ChakraUI on the frontend and [KeystoneJS](https://keystonejs.com/) on the backend. I also modernized the deployment by building Docker images for the frontend and backend. I actually ended up keeping parts of it in PHP because I couldn’t find a JavaScript library that would solve the challenges I had. Here are some screenshots of it in action:

|

||||

|

||||

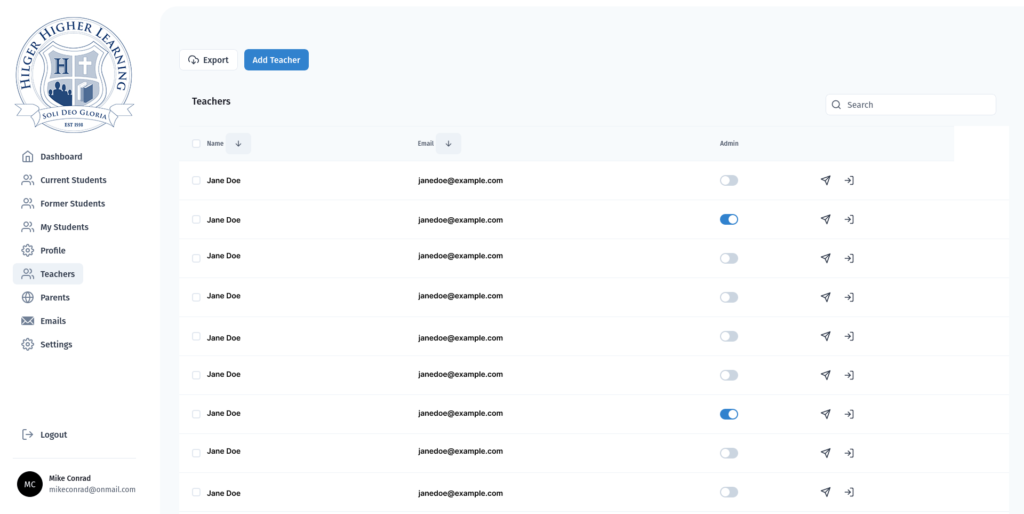

This is the page listing all teachers in the system and whether or not they have admin privileges. Any admin user can grant this privilege to other users. There is also a button to send the teacher a password reset email (via Postmark API integration) and an option that allows admin users to impersonate other users for troubleshooting and diagnostic purposes.

|

||||

|

||||

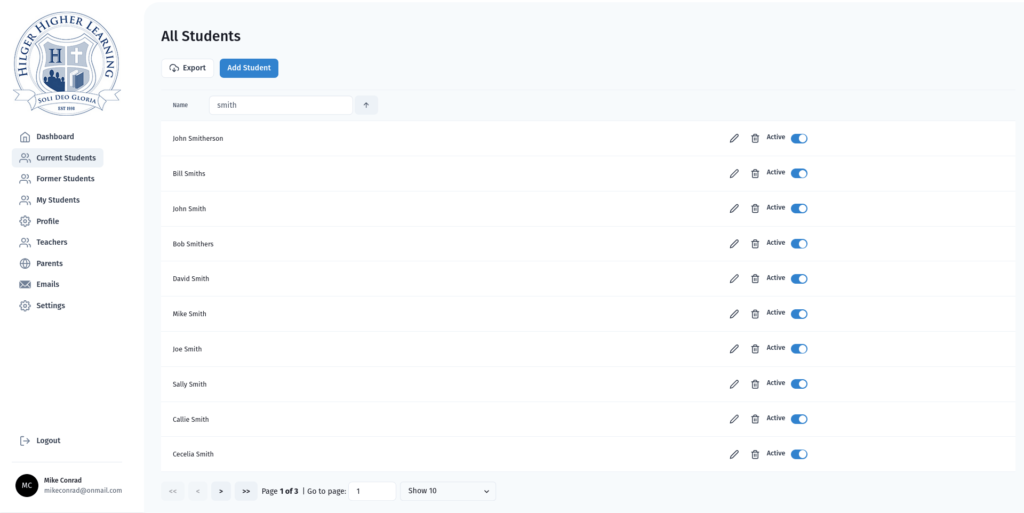

The data is all coming from the KeystoneJS backend GraphQL API. I am using [urql](https://www.npmjs.com/package/urql) for fetching the data and handling mutations. This is the page that displays students. It is filterable and searchable. Teachers also have the ability to mark a student as active or inactive for the semester as well as delete them from the system.

|

||||

|

||||

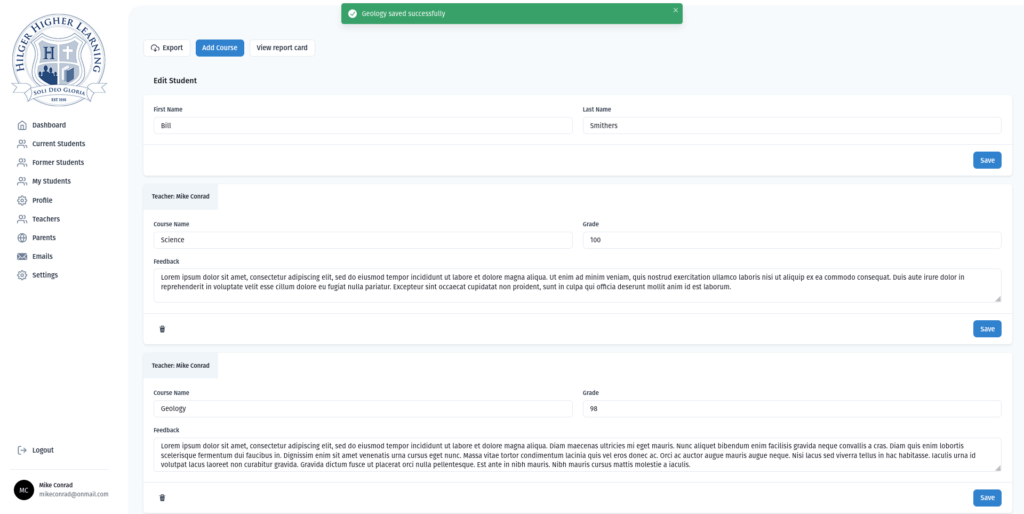

Clicking on a student takes the teacher/admin to an edit course screen where they can add and remove courses for each student. A teacher can add as many courses as they need. If multiple teachers have added courses for this student, the user will only see the courses they have entered.

|

||||

|

||||

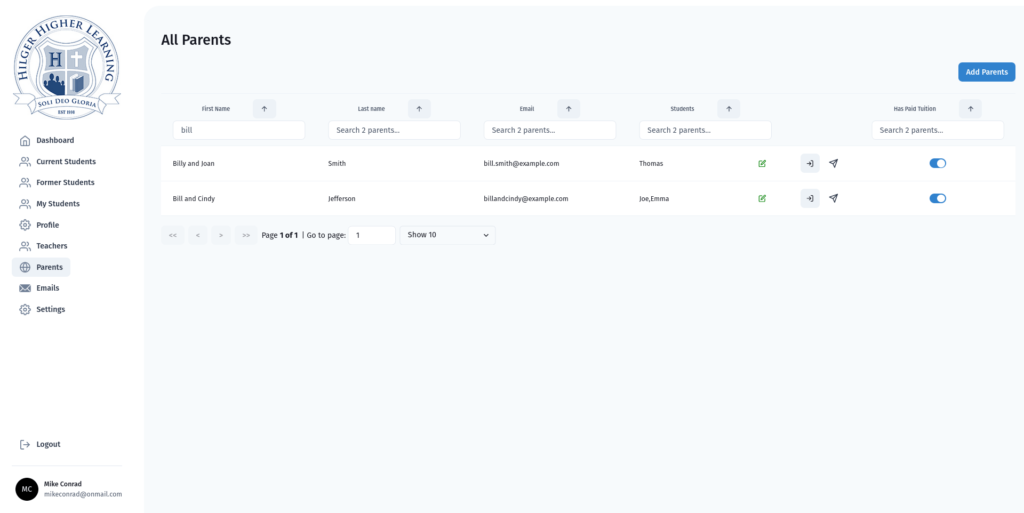

There is another page that allows admin users to view and manage all of the parents in the system. It allows them to easily send a password reset email to the parents as well as to view the parent portal.

|

||||

|

||||

---

|

||||

|

||||

## Technologies used

|

||||

|

||||

- Digital Ocean Droplet (Server) – Ubuntu Server

|

||||

- Docker (Frontend, Backend, PHP, Postgresql database)

|

||||

- Git

|

||||

- NodeJS

|

||||

- PHP

|

||||

- ChakraUI

|

||||

- KeystoneJS

|

||||

- Postmark

|

||||

- GraphQL

|

||||

- Typescript

|

||||

- React

|

||||

- urql

|

||||

33

content/post/2023-07-12-hoots-wings.md

Normal file

@ -0,0 +1,33 @@

|

||||

---

|

||||

author: mikeconrad

|

||||

categories:

|

||||

- Software Engineering

|

||||

date: "2023-07-12T11:32:44Z"

|

||||

image: /wp-content/uploads/2024/03/hoots-locations-min.webp

|

||||

tags:

|

||||

- Portfolio

|

||||

title: Hoots Wings

|

||||

|

||||

---

|

||||

|

||||

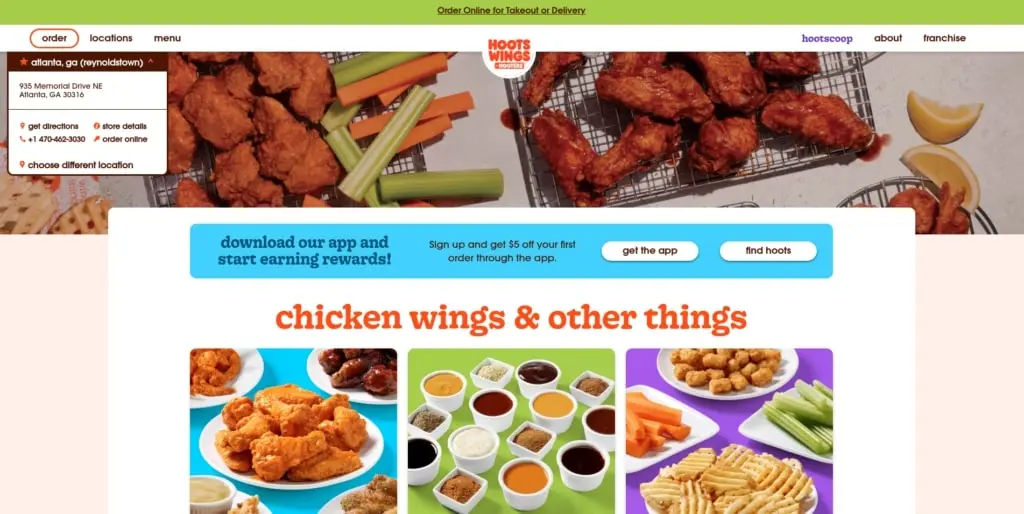

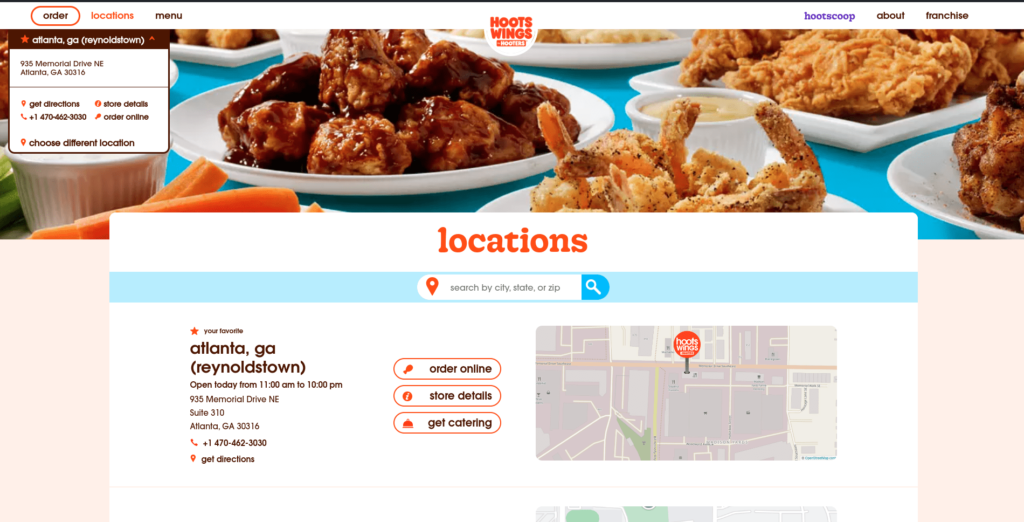

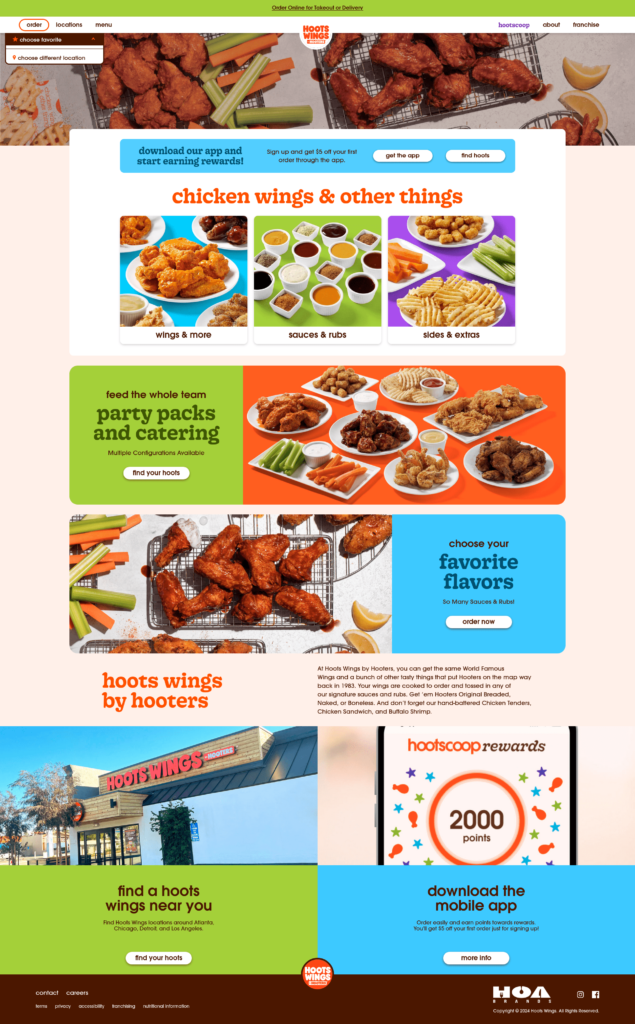

While working for [Morrison](https://morrison.agency/) I had the pleasure of building a website for [Hoots Wings](https://hootswings.com). The CMS was [Perch](https://grabaperch.com/) and it was mostly HTML, CSS, PHP and JavaScript on the frontend; however, I built out a custom store locator using NodeJS and VueJS.

|

||||

|

||||

I was the sole frontend developer responsible for taking the designs from SketchUp and translating them to the site you see now. Most of the blocks and templates are built using a mix of PHP and HTML/SCSS. There was also some JavaScript for things like getting the user’s location and rendering popups/modals.

|

||||

|

||||

The store locator was a separate piece that was built in Vue2.0 with a NodeJS backend. For the backend I used [KeystoneJS](https://keystonejs.com/) to hold all of the store information. There was also some custom development that was done in order to sync the stores added via the CMS with [Yext](https://www.yext.com/) and vice versa.

|

||||

|

||||

For that piece I ended up having to write a custom integration in Perch that would connect to the NodeJS backend and pull the stores but also make sure that those were in sync with Yext. This required diving into the Yext API some and examining a similar integration that we had for another client site.

|

||||

|

||||

Unfortunately I don’t have any screen grabs of the admin side of things since that is proprietary, but the system I built allowed a site admin to go in and add/edit store locations that would show up on the site and also in Yext with the appropriate information.

|

||||

|

||||

#### Screenshots

|

||||

|

||||

Here are some full screenshots of the site.

|

||||

|

||||

Homepage

|

||||

|

||||

Menu Page

|

||||

|

||||

Locations Page

|

||||

|

||||


|

||||

@ -0,0 +1,166 @@

|

||||

---

|

||||

author: mikeconrad

|

||||

categories:

|

||||

- Software Engineering

|

||||

date: "2024-01-03T19:59:49Z"

|

||||

tags:

|

||||

- Blog Post

|

||||

- GraphQL

|

||||

- KeystoneJS

|

||||

- NodeJS

|

||||

- Prisma

|

||||

- TypeScript

|

||||

title: Roll your own authenticator app with KeystoneJS and React

|

||||

---

|

||||

|

||||

In this series of articles we are going to be building an authenticator app using KeystoneJS for the backend and React for the frontend. The concept is pretty simple and yes there are a bunch out there already but I recently had a need to learn some of the ins and outs of TOTP tokens and thought this project would be a fun idea. Let’s get started.

|

||||

|

||||

##### Step 1: Init keystone app

|

||||

|

||||

Open up a terminal and create a blank keystone project. We are going to call our app authenticator to keep things simple.

|

||||

|

||||

```

|

||||

$ yarn create keystone-app

|

||||

yarn create v1.22.21

|

||||

[1/4] Resolving packages...

|

||||

[2/4] Fetching packages...

|

||||

[3/4] Linking dependencies...

|

||||

[4/4] Building fresh packages...

|

||||

|

||||

success Installed "create-keystone-app@9.0.1" with binaries:

|

||||

- create-keystone-app

|

||||

[###################################################################################################################################################################################] 273/273

|

||||

✨ You're about to generate a project using Keystone 6 packages.

|

||||

|

||||

✔ What directory should create-keystone-app generate your app into? · authenticator

|

||||

|

||||

⠸ Installing dependencies with yarn. This may take a few minutes.

|

||||

⚠ Failed to install with yarn.

|

||||

✔ Installed dependencies with npm.

|

||||

|

||||

|

||||

🎉 Keystone created a starter project in: authenticator

|

||||

|

||||

To launch your app, run:

|

||||

|

||||

- cd authenticator

|

||||

- npm run dev

|

||||

|

||||

Next steps:

|

||||

|

||||

- Read authenticator/README.md for additional getting started details.

|

||||

- Edit authenticator/keystone.ts to customize your app.

|

||||

- Open the Admin UI

|

||||

- Open the Graphql API

|

||||

- Read the docs

|

||||

- Star Keystone on GitHub

|

||||

|

||||

Done in 84.06s.

|

||||

|

||||

```

|

||||

|

||||

After a few minutes you should be ready to go. Ignore the error about yarn not being able to install dependencies; it’s an issue with my setup. Next, go ahead and open up the project folder with your editor of choice. I use VSCodium:

|

||||

|

||||

```

|

||||

codium authenticator

|

||||

```

|

||||

|

||||

Let’s go ahead and remove all the comments from the `schema.ts` file and clean it up some:

|

||||

|

||||

```

|

||||

sed -i '/\/\//d' schema.ts

|

||||

```

|

||||

|

||||

Also, go ahead and delete the Post and Tag lists as we won’t be using them. Our cleaned up `schema.ts` should look like this:

|

||||

|

||||

```
// schema.ts
import { list } from '@keystone-6/core';
import { allowAll } from '@keystone-6/core/access';

import {
  text,
  relationship,
  password,
  timestamp,
  select,
} from '@keystone-6/core/fields';

import type { Lists } from '.keystone/types';

export const lists: Lists = {
  User: list({
    access: allowAll,

    fields: {
      name: text({ validation: { isRequired: true } }),
      email: text({
        validation: { isRequired: true },
        isIndexed: 'unique',
      }),
      password: password({ validation: { isRequired: true } }),
      createdAt: timestamp({
        defaultValue: { kind: 'now' },
      }),
    },
  }),
};
```

|

||||

|

||||

Next we will define the schema for our tokens. We will need 3 basic things to start with:

|

||||

|

||||

- Issuer

|

||||

- Secret Key

|

||||

- Account

|

||||

|

||||

The only thing that really matters for generating a TOTP is actually the secret key. The other two fields are mostly for identifying and differentiating tokens. Go ahead and add the following to our `schema.ts` underneath the User list:

|

||||

|

||||

```
Token: list({
  access: allowAll,
  fields: {
    secretKey: text({ validation: { isRequired: true } }),
    issuer: text({ validation: { isRequired: true } }),
    account: text({ validation: { isRequired: true } }),
  },
}),
```

|

||||

|

||||

Now that we have defined our Token, we should probably link it to a user. KeystoneJS makes this really easy. We simply need to add a relationship field to our User list. Add the following field to the User list:

|

||||

|

||||

```

|

||||

tokens: relationship({ ref:'Token', many: true })

|

||||

```

|

||||

|

||||

We are defining a tokens field on the User list and tying it to our Token list. We are also passing `many: true` saying that a user can have one or more tokens. Now that we have the basics set up, let’s go ahead and spin up our app and see what we have:

|

||||

|

||||

```

|

||||

$ yarn dev

|

||||

yarn run v1.22.21

|

||||

$ keystone dev

|

||||

✨ Starting Keystone

|

||||

⭐️ Server listening on :3000 (http://localhost:3000/)

|

||||

⭐️ GraphQL API available at /api/graphql

|

||||

✨ Generating GraphQL and Prisma schemas

|

||||

✨ The database is already in sync with the Prisma schema

|

||||

✨ Connecting to the database

|

||||

✨ Creating server

|

||||

✅ GraphQL API ready

|

||||

✨ Generating Admin UI code

|

||||

✨ Preparing Admin UI app

|

||||

✅ Admin UI ready

|

||||

|

||||

```
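The log above shows the GraphQL API being served at `/api/graphql`. As a quick sanity check of the new relationship, a request along these lines could list each user together with their tokens. This is only a sketch: the helper names (`buildRequest`, `listUsersWithTokens`) are made up for illustration, and it assumes the default `users` query that Keystone generates for the `User` list, the `allowAll` access from our schema, and a server running on `localhost:3000`.

```typescript
// Query each user along with the tokens linked through the `tokens` relationship field.
const USERS_WITH_TOKENS = `
  query {
    users {
      name
      tokens {
        issuer
        account
      }
    }
  }
`;

interface GraphQLRequest {
  method: "POST";
  headers: Record<string, string>;
  body: string;
}

// Hypothetical helper: wraps a GraphQL query in the options for a JSON POST request.
function buildRequest(query: string): GraphQLRequest {
  return {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ query }),
  };
}

// Hypothetical helper: the endpoint default is an assumption based on the dev server log.
async function listUsersWithTokens(
  endpoint = "http://localhost:3000/api/graphql"
): Promise<unknown> {
  const res = await fetch(endpoint, buildRequest(USERS_WITH_TOKENS));
  if (!res.ok) {
    throw new Error(`GraphQL request failed with status ${res.status}`);
  }
  const json = await res.json();
  return json.data;
}
```

Calling `listUsersWithTokens()` while the dev server is running should resolve to the `data` object of the response. The same query can also be pasted into the GraphQL playground if you would rather not write any code yet.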

|

||||

|

||||

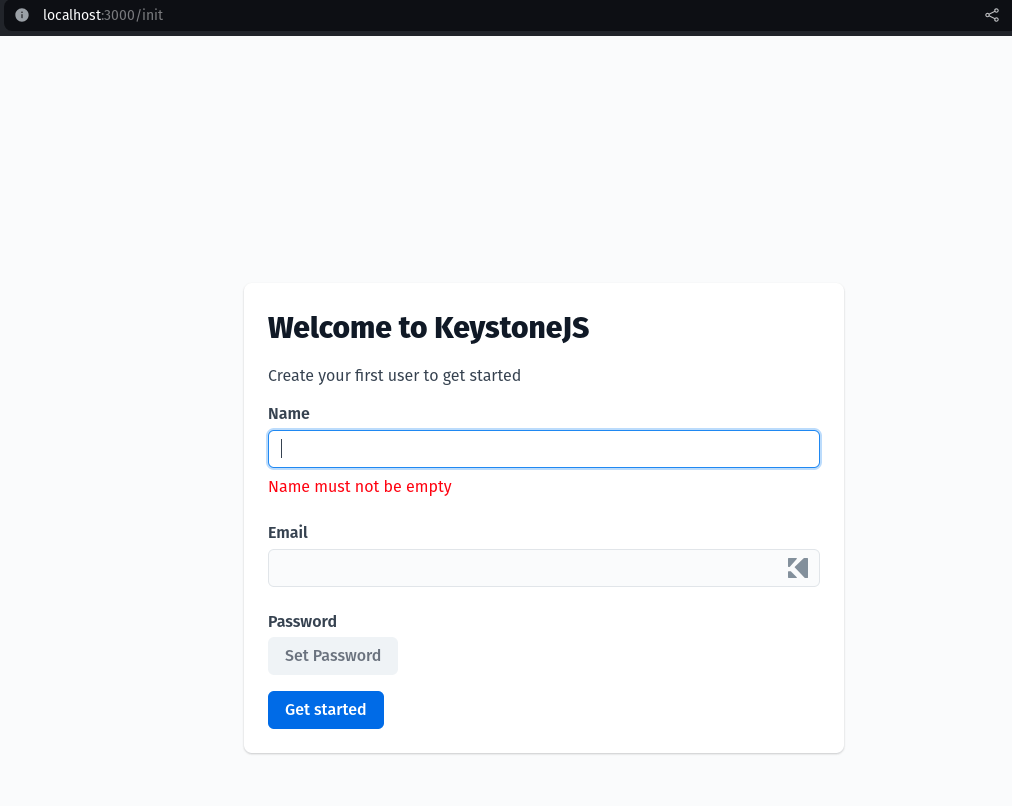

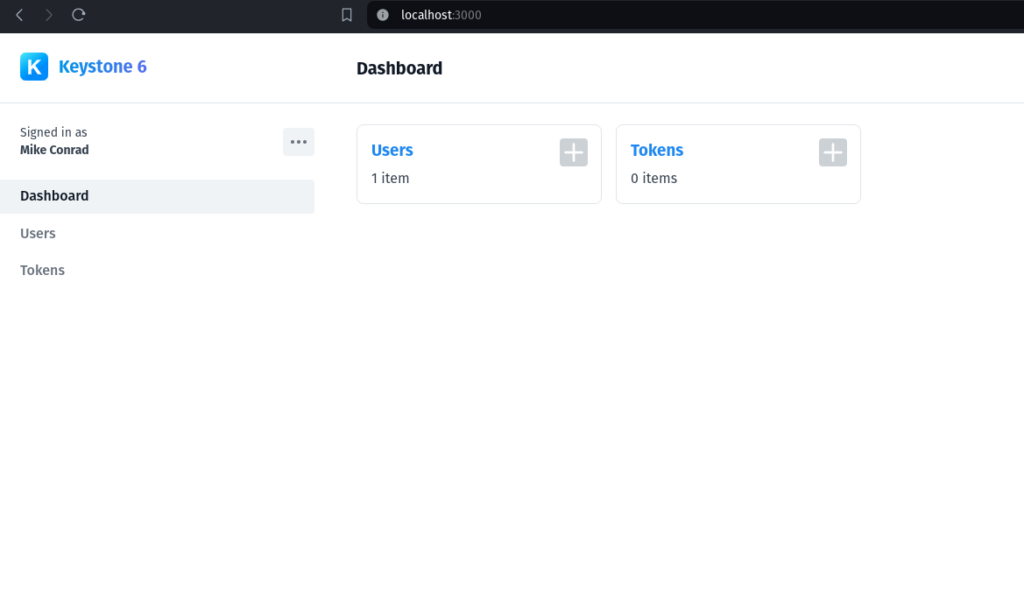

Our server should be running on localhost:3000 so let’s check it out! The first time we open it up we will be greeted with the initialization screen. Go ahead and create an account to login:

|

||||

|

||||

Once you login you should see a dashboard similar to this:

|

||||

|

||||

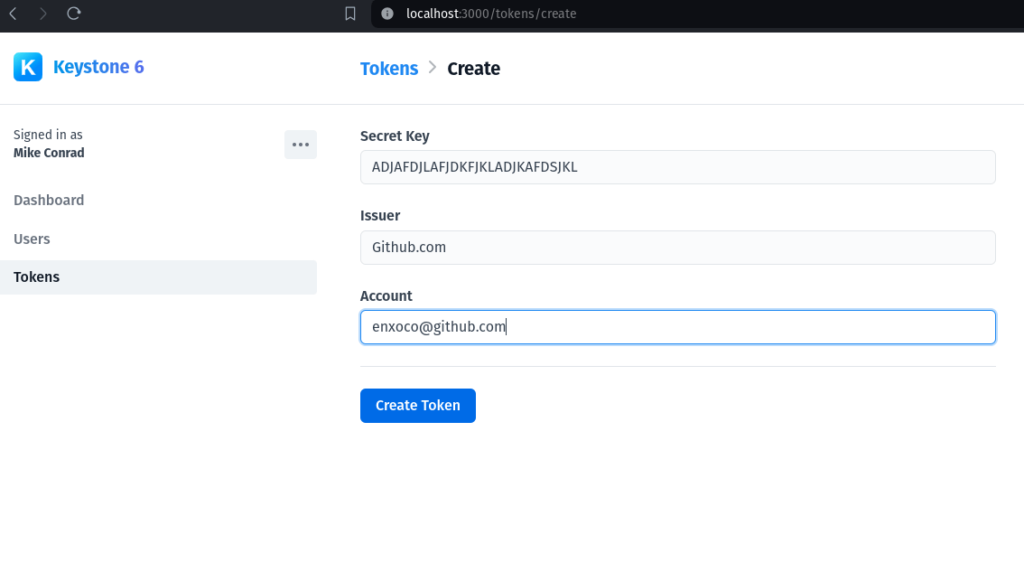

You can see we have Users and Tokens that we can manage. The beauty of KeystoneJS is that you get full CRUD functionality out of the box just by defining our schema! Go ahead and click on Tokens to add a token:

|

||||

|

||||

For this example I just entered some random text as an example. This is enough to start testing out our TOTP functionality. Click ‘Create Token’ and you should see a list displaying existing tokens:

|

||||

|

||||

We are now ready to jump into the frontend. Stay tuned for pt 2 of this series.

|

||||

@ -0,0 +1,246 @@

|

||||

---

|

||||

author: mikeconrad

|

||||

categories:

|

||||

- Software Engineering

|

||||

date: "2024-01-10T20:41:00Z"

|

||||

guid: https://hackanooga.com/?p=539

|

||||

id: 539

|

||||

tags:

|

||||

- Blog Post

|

||||

- GraphQL

|

||||

- KeystoneJS

|

||||

- NodeJS

|

||||

- Prisma

|

||||

- TypeScript

|

||||

title: Roll your own authenticator app with KeystoneJS and React - pt 2

|

||||

url: /roll-your-own-authenticator-app-with-keystonejs-and-react-pt-2/

|

||||

---

|

||||

|

||||

In part 1 of this series we built out a basic backend using KeystoneJS. In this part we will go ahead and start a new React frontend that will interact with our backend. We will be using Vite. Let’s get started. Make sure you are in the `authenticator` folder and run the following:

|

||||

|

||||

```

|

||||

$ yarn create vite@latest

|

||||

yarn create v1.22.21

|

||||

[1/4] Resolving packages...

|

||||

[2/4] Fetching packages...

|

||||

[3/4] Linking dependencies...

|

||||

[4/4] Building fresh packages...

|

||||

|

||||

success Installed "create-vite@5.2.2" with binaries:

|

||||

- create-vite

|

||||

- cva

|

||||

✔ Project name: … frontend

|

||||

✔ Select a framework: › React

|

||||

✔ Select a variant: › TypeScript

|

||||

|

||||

Scaffolding project in /home/mikeconrad/projects/authenticator/frontend...

|

||||

|

||||

Done. Now run:

|

||||

|

||||

cd frontend

|

||||

yarn

|

||||

yarn dev

|

||||

|

||||

Done in 10.20s.

|

||||

```

|

||||

|

||||

Let’s go ahead and go into our frontend directory and get started:

|

||||

|

||||

```

|

||||

$ cd frontend

|

||||

$ yarn

|

||||

yarn install v1.22.21

|

||||

info No lockfile found.

|

||||

[1/4] Resolving packages...

|

||||

[2/4] Fetching packages...

|

||||

[3/4] Linking dependencies...

|

||||

[4/4] Building fresh packages...

|

||||

success Saved lockfile.

|

||||

Done in 10.21s.

|

||||

|

||||

$ yarn dev

|

||||

yarn run v1.22.21

|

||||

$ vite

|

||||

Port 5173 is in use, trying another one...

|

||||

|

||||

VITE v5.1.6 ready in 218 ms

|

||||

|

||||

➜ Local: http://localhost:5174/

|

||||

➜ Network: use --host to expose

|

||||

➜ press h + enter to show help

|

||||

|

||||

```

|

||||

|

||||

Next go ahead and open the project up in your IDE of choice. I prefer [VSCodium](https://vscodium.com/):

|

||||

|

||||

```

|

||||

codium frontend

|

||||

```

|

||||

|

||||

Go ahead and open up `src/App.tsx` and remove all the boilerplate so it looks like this:

|

||||

|

||||

```

|

||||

import './App.css'

|

||||

|

||||

function App() {

|

||||

|

||||

return (

|

||||

<>

|

||||

</>

|

||||

)

|

||||

}

|

||||

|

||||

export default App

|

||||

```

|

||||

|

||||

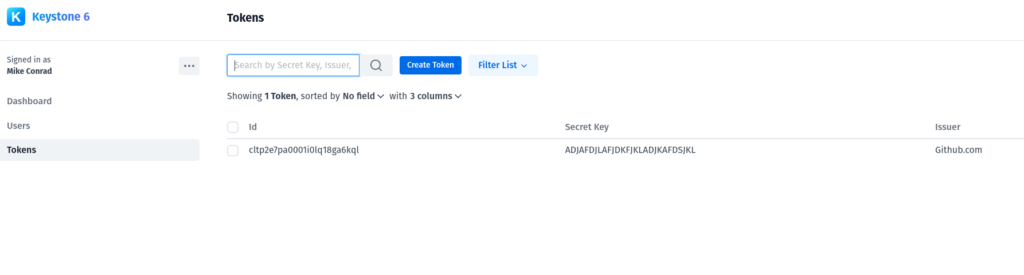

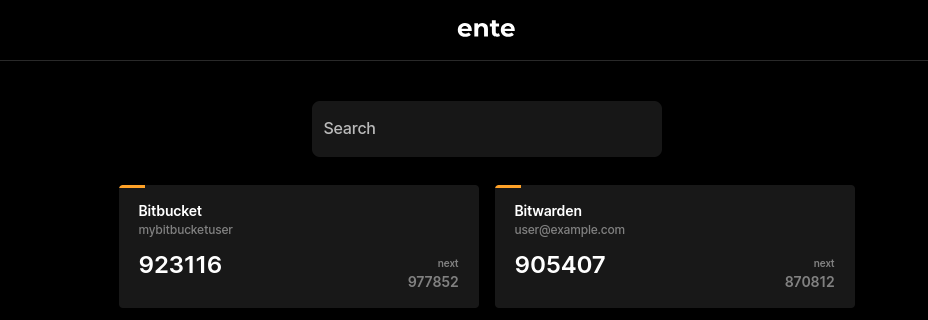

Let’s start by building a card component that will display an individual token. Our goal is something that looks like this:

|

||||

|

||||

<figure class="wp-block-image size-full"></figure>We will start by creating a Components folder with a Card component:

|

||||

|

||||

```

|

||||

$ mkdir src/Components

|

||||

$ touch src/Components/Card.tsx

|

||||

```

|

||||

|

||||

Let’s go ahead and make a couple of updates. We will create this simple card component, add some dummy tokens and add some basic styling.

|

||||

|

||||

```
// src/App.tsx

import './App.css'
import Card from './Components/Card';

export interface IToken {
  account: string;
  issuer: string;
  token: string;
}

function App() {
  const tokens: IToken[] = [
    {
      account: 'enxoco@github.com',
      issuer: 'Github',
      token: 'AJFDLDAJKFK'
    },
    {
      account: 'mikeconrad@example.com',
      issuer: 'Example.com',
      token: 'KAJLFDJLKAFD'
    }
  ]
  return (
    <>
      <div className='cardWrapper'>
        {/* React needs a unique key for each item rendered from a list */}
        {tokens.map(token => <Card key={token.token} token={token} />)}
      </div>
    </>
  )
}

export default App
```

|

||||

|

||||

```
// src/Components/Card.tsx
import { IToken } from "../App"

function Card({ token }: { token: IToken }) {
  return (
    <>
      <div className='card'>
        <span>{token.issuer}</span>
        <span>{token.account}</span>
        <span>{token.token}</span>
      </div>
    </>
  )
}
export default Card
```

|

||||

|

||||

```
/* src/index.css */
:root {
  font-family: Inter, system-ui, Avenir, Helvetica, Arial, sans-serif;
  line-height: 1.5;
  font-weight: 400;

  color-scheme: light dark;
  color: rgba(255, 255, 255, 0.87);

  font-synthesis: none;
  text-rendering: optimizeLegibility;
  -webkit-font-smoothing: antialiased;
  -moz-osx-font-smoothing: grayscale;
}

a {
  font-weight: 500;
  color: #646cff;
  text-decoration: inherit;
}
a:hover {
  color: #535bf2;
}

body {
  margin: 0;
  display: flex;
  place-items: center;
  min-width: 320px;
  min-height: 100vh;
  background-color: #2c2c2c;
}

.cardWrapper {
  display: flex;
}

.card {
  padding: 2em;
  min-width: 250px;
  border: 1px solid;
  margin: 10px;
  background-color: #333333;
  display: flex;
  flex-direction: column;
  align-items: baseline;
}
```

|

||||

|

||||

Now you should have something that looks like this:

|

||||

|

||||

Alright, we have some of the boring stuff out of the way, now let’s start making some magic. If you aren’t familiar with how TOTP tokens work, I would encourage you to read the [RFC](https://datatracker.ietf.org/doc/html/rfc6238) for a detailed explanation. Basically it is an algorithm that generates a one-time password using the current time as a source of uniqueness along with the secret key.
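To make that a little more concrete, here is a rough sketch of the algorithm from the RFC written against Node’s built-in `crypto` module. It is not the code from any particular library, and the function name `totp` and its parameters are my own. It also assumes the secret is already raw bytes (real authenticator secrets are usually Base32 encoded and would need decoding first) and that HMAC-SHA1 is used, which is the default in the RFC.

```typescript
import { createHmac } from "node:crypto";

// Sketch of RFC 6238: HOTP (RFC 4226) applied to a counter derived from the time.
function totp(
  secret: Buffer,       // raw key bytes (assumed already Base32-decoded)
  unixSeconds: number,  // current time in seconds since the Unix epoch
  period = 30,          // validity window in seconds
  digits = 6            // length of the generated code
): string {
  // 1. Turn the time into a counter: the number of whole periods since the epoch.
  const counter = Math.floor(unixSeconds / period);

  // 2. Encode the counter as an 8-byte big-endian integer.
  const message = Buffer.alloc(8);
  message.writeUInt32BE(Math.floor(counter / 0x100000000), 0);
  message.writeUInt32BE(counter >>> 0, 4);

  // 3. HMAC the counter with the secret key.
  const hmac = createHmac("sha1", secret).update(message).digest();

  // 4. Dynamic truncation: the low 4 bits of the last byte pick a 4-byte window,
  //    and the top bit of that window is masked off.
  const offset = hmac[hmac.length - 1] & 0x0f;
  const binary = hmac.readUInt32BE(offset) & 0x7fffffff;

  // 5. Keep the last `digits` decimal digits, left-padded with zeros.
  return (binary % Math.pow(10, digits)).toString().padStart(digits, "0");
}
```

A call such as `totp(Buffer.from("12345678901234567890"), Math.floor(Date.now() / 1000))` returns the current 6 digit code for that key. The test vectors in Appendix B of the RFC are a handy way to check an implementation like this one.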

|

||||

|

||||

If we really wanted to we could implement this algorithm ourselves, but thankfully there are some really simple libraries that do it for us. For our project we will be using one called [`totp-generator`](https://github.com/bellstrand/totp-generator). Let’s go ahead and install it and check it out:

|

||||

|

||||

```

|

||||

$ yarn add totp-generator

|

||||

```

|

||||

|

||||

Now let’s add it to our card component and see what happens. Using it is really simple. We just need to import `TOTP` and call `TOTP.generate` with our secret key:

|

||||

|

||||

```
// src/Components/Card.tsx
import { TOTP } from 'totp-generator';
// ...
function Card({ token }: { token: IToken }) {
  const { otp, expires } = TOTP.generate(token.token)
  return (
    <>
      <div className='card'>
        <span>{token.issuer}</span>
        <span>{token.account}</span>
        <span>{otp} - {expires}</span>
      </div>
    </>
  )
}
```

|

||||

|

||||

Now save and go back to your browser and you should see that our secret keys are now being displayed as tokens:

|

||||

|

||||

That is pretty cool, the only problem is you need to refresh the page to refresh the token. We will take care of that in part 3 of this series as well as handling fetching tokens from our backend.

|

||||

@ -0,0 +1,157 @@

|

||||

---

|

||||

author: mikeconrad

|

||||

categories:

|

||||

- Software Engineering

|

||||

date: "2024-01-17T12:11:00Z"

|

||||

enclosure:

|

||||

- |

|

||||

https://hackanooga.com/wp-content/uploads/2024/03/otp-countdown.mp4

|

||||

29730

|

||||

video/mp4

|

||||

guid: https://hackanooga.com/?p=546

|

||||

id: 546

|

||||

tags:

|

||||

- Authentication

|

||||

- Blog Post

|

||||

- React

|

||||

- TypeScript

|

||||

title: Roll your own authenticator app with KeystoneJS and React - pt 3

|

||||

url: /roll-your-own-authenticator-app-with-keystonejs-and-react-pt-3/

|

||||

---

|

||||

|

||||

In our previous post we got to the point of displaying an OTP in our card component. Now it is time to refactor a bit and implement a countdown functionality to see when this token will expire. For now we will go ahead and add this logic into our Card component. In order to figure out how to build this countdown timer we first need to understand how the TOTP counter is calculated.

|

||||

|

||||

In other words, we know that a TOTP token is derived from a secret key and the current time. If we dig into the spec some we can find that time is a reference to Unix epoch time, or the number of seconds that have elapsed since January 1st 1970 (UTC). For a little more clarification check out this Stack Exchange [article](https://crypto.stackexchange.com/questions/72558/what-time-is-used-in-a-totp-counter).

|

||||

|

||||

So if we know that the time is based on epoch time, we also need to know that most TOTP tokens have a validity period of either 30 seconds or 60 seconds. 30 seconds is the most common standard so we will use that for our implementation. If we put all that together then basically all we need is 2 variables:

|

||||

|

||||

1. Number of seconds since epoch

|

||||

2. How many seconds until this token expires

|

||||

|

||||

The first one is easy:

|

||||

|

||||

```
let secondsSinceEpoch;
secondsSinceEpoch = Math.ceil(Date.now() / 1000) - 1;

// This gives us a time like so: 1710338609
```

|

||||

|

||||

For the second one we will need to do a little math but it’s pretty straightforward. We take the remainder of `secondsSinceEpoch` divided by 30 (how far we are into the current period) and subtract that from 30. Here is what that looks like:

|

||||

|

||||

```
let secondsSinceEpoch;
let secondsRemaining;
const period = 30;

secondsSinceEpoch = Math.ceil(Date.now() / 1000) - 1;
secondsRemaining = period - (secondsSinceEpoch % period);
```

|

||||

|

||||

Now let’s put all of that together into a function that we can test out to make sure we are getting the results we expect.

|

||||

|

||||

```
const timer = setInterval(() => {
  countdown()
}, 1000)

function countdown() {
  let secondsSinceEpoch;
  let secondsRemaining;

  const period = 30;
  secondsSinceEpoch = Math.ceil(Date.now() / 1000) - 1;

  secondsRemaining = period - (secondsSinceEpoch % period);
  console.log(secondsSinceEpoch, secondsRemaining)
  if (secondsRemaining == 1) {
    console.log("timer done")
    clearInterval(timer)
  }
}
```

|

||||

|

||||

Running this function should give you output similar to the following. In this example we are stopping the timer once it hits 1 second just to show that everything is working as we expect. In our application we will want this timer to keep going forever:

|

||||

|

||||

```
1710339348, 12
1710339349, 11
1710339350, 10
1710339351, 9
1710339352, 8
1710339353, 7
1710339354, 6
1710339355, 5
1710339356, 4
1710339357, 3
1710339358, 2
1710339359, 1
"timer done"
```

|

||||

|

||||

Here is a JSfiddle that shows it in action: https://jsfiddle.net/561vg3k7/

|

||||

|

||||

We can go ahead and add this function to our Card component and get it wired up. I am going to skip ahead a bit and add a progress bar to our card that is synced with our countdown timer and changes colors as it drops below 10 seconds. For now we will be using a `setInterval` function to accomplish this.

|

||||

|

||||

Here is what my updated `src/Components/Card.tsx` looks like:

|

||||

|

||||

```
import { useEffect, useState } from "react";
import { IToken } from "../App"
import { TOTP } from 'totp-generator';

function Card({ token }: { token: IToken }) {
  const { otp } = TOTP.generate(token.token);
  const [timerStyle, setTimerStyle] = useState("");
  const [timerWidth, setTimerWidth] = useState("");

  function countdown() {
    const period = 30;
    const secondsSinceEpoch = Math.ceil(Date.now() / 1000) - 1;
    const secondsRemaining = period - (secondsSinceEpoch % period);
    setTimerWidth(`${100 - (100 / 30 * (30 - secondsRemaining))}%`)
    setTimerStyle(secondsRemaining < 10 ? "salmon" : "lightgreen")
  }

  // Start one interval when the card mounts and clear it on unmount.
  // Calling setInterval directly in the component body would start
  // an additional timer on every render.
  useEffect(() => {
    const timer = setInterval(countdown, 250);
    return () => clearInterval(timer);
  }, []);

  return (
    <>
      <div className='card'>
        <div className='progressBar' style={{ width: timerWidth, backgroundColor: timerStyle }}></div>
        <span>{token.issuer}</span>
        <span>{token.account}</span>
        <span>{otp}</span>
      </div>
    </>
  )
}
export default Card
```

|

||||

|

||||

Pretty straightforward. I also updated my `src/index.css` and added a style for our progress bar:

|

||||

|

||||

```
.progressBar {
  height: 10px;
  position: absolute;
  top: 0;
  left: 0;
  right: inherit;
}
/* Also be sure to add position: relative to .card, which is the parent of this. */
```

|

||||

|

||||

Here is what it all looks like in action:

|

||||

|

||||

<figure class="wp-block-video"><video controls="" src="https://hackanooga.com/wp-content/uploads/2024/03/otp-countdown.mp4"></video></figure>

If you look closely you will notice a few interesting things. First is that the color of the progress bar changes from green to red. This is handled by our `timerStyle` variable. That part is pretty simple: if fewer than 10 seconds remain we set the background color to salmon, otherwise we use light green. The width of the progress bar is controlled by `${100 - (100 / 30 * (30 - secondsRemaining))}%`.
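The width and color rules are easy to check on their own if they are pulled out into small pure functions. The following is just a sketch with made up helper names (`secondsRemaining`, `progressWidth`, `progressColor`), not what the Card component uses. Note that it assumes `Math.floor` for the epoch seconds rather than the `Math.ceil(...) - 1` used above, and it writes the width as the simplified `remaining / period`, which is the same value as the longer expression.

```typescript
const PERIOD_SECONDS = 30;

// Seconds left in the current TOTP period for a given timestamp in milliseconds.
function secondsRemaining(nowMs: number, period = PERIOD_SECONDS): number {
  const secondsSinceEpoch = Math.floor(nowMs / 1000);
  return period - (secondsSinceEpoch % period);
}

// Width of the progress bar as a CSS percentage: full at the start of a period,
// shrinking toward zero as the token gets close to expiring.
function progressWidth(remaining: number, period = PERIOD_SECONDS): string {
  return `${(remaining * 100) / period}%`;
}

// Color of the progress bar: salmon for the last few seconds, light green otherwise.
function progressColor(remaining: number): string {
  return remaining < 10 ? "salmon" : "lightgreen";
}

// Example: evaluate all three for the current moment.
const remainingNow = secondsRemaining(Date.now());
console.log(remainingNow, progressWidth(remainingNow), progressColor(remainingNow));
```

Because the helpers take the time as an argument instead of reading the clock themselves, they can be exercised with fixed timestamps, which is much harder to do once the logic lives inside a `setInterval` callback.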

|

||||

|

||||

The other interesting thing to note is that when the timer runs out it automatically restarts at 30 seconds with a new OTP. This is because the component re-renders whenever the timer updates its state, and every time it re-renders it runs the entire function body, including `const { otp } = TOTP.generate(token.token);`.

|

||||

|

||||

This is to be expected since we are using React and we are just using a setInterval. It may be a little unexpected though if you aren’t as familiar with React render cycles. For our purposes this will work just fine for now. Stay tuned for pt 4 of this series where we wire up the backend API.

|

||||

@ -0,0 +1,153 @@

|

||||

---

|

||||

author: mikeconrad

|

||||

categories:

|

||||

- Cloudflare

|

||||

- Docker

|

||||

- Self Hosted

|

||||

- Traefik

|

||||

dark_fusion_page_sidebar:

|

||||

- sidebar-1

|

||||

dark_fusion_site_layout:

|

||||

- ""

|

||||

date: "2024-02-01T14:35:00Z"

|

||||

guid: https://wordpress.hackanooga.com/?p=422

|

||||

id: 422

|

||||

tags:

|

||||

- Blog Post

|

||||

title: Traefik with Let’s Encrypt and Cloudflare (pt 1)

|

||||

url: /traefik-with-lets-encrypt-and-cloudflare-pt-1/

|

||||

---

|

||||

|

||||

Recently I decided to rebuild one of my homelab servers. Previously I was using Nginx as my reverse proxy but I decided to switch to Traefik since I have been using it professionally for some time now. One of the reasons I like Traefik is that it is stupid simple to set up certificates, and when I am using it with Docker I don’t have to worry about a bunch of configuration files. If you aren’t familiar with how Traefik works with Docker, here is a brief example of a `docker-compose.yaml`:

|

||||

|

||||

```
version: '3'

services:
  reverse-proxy:
    # The official v2 Traefik docker image
    image: traefik:v2.11
    # Enables the web UI and tells Traefik to listen to docker
    command:
      - --api.insecure=true
      - --providers.docker=true
      - --entrypoints.web.address=:80
      - --entrypoints.websecure.address=:443
      # Set up LetsEncrypt
      - --certificatesresolvers.letsencrypt.acme.dnschallenge=true
      - --certificatesresolvers.letsencrypt.acme.dnschallenge.provider=cloudflare
      - --certificatesresolvers.letsencrypt.acme.email=user@example.com
      - --certificatesresolvers.letsencrypt.acme.storage=/letsencrypt/acme.json
      # Redirect all http requests to https
      - --entryPoints.web.http.redirections.entryPoint.to=websecure
      - --entryPoints.web.http.redirections.entryPoint.scheme=https
      - --entryPoints.web.http.redirections.entrypoint.permanent=true
      - --log=true
      - --log.level=INFO
    # Needed to request certs via lets encrypt
    environment:
      - CF_DNS_API_TOKEN=[redacted]
    ports:
      # The HTTP port
      - "80:80"
      - "443:443"
      # The Web UI (enabled by --api.insecure=true)
      - "8080:8080"
    volumes:
      # So that Traefik can listen to the Docker events
      - /var/run/docker.sock:/var/run/docker.sock:ro
      # Used for storing letsencrypt certificates
      - ./letsencrypt:/letsencrypt
      - ./volumes/traefik/logs:/logs
    networks:
      - traefik
  ots:
    image: luzifer/ots
    container_name: ots
    restart: always
    environment:
      REDIS_URL: redis://redis:6379/0
      SECRET_EXPIRY: "604800"
      STORAGE_TYPE: redis
    depends_on:
      - redis
    labels:
      - traefik.enable=true
      - traefik.http.routers.ots.rule=Host(`ots.example.com`)
      - traefik.http.routers.ots.entrypoints=websecure
      - traefik.http.routers.ots.tls=true
      - traefik.http.routers.ots.tls.certresolver=letsencrypt
      - traefik.http.services.ots.loadbalancer.server.port=3000
    networks:
      - traefik
  redis:
    image: redis:alpine
    restart: always
    volumes:
      - ./redis-data:/data
    networks:
      - traefik
networks:
  traefik:
    external: true
```


In part one of this series I will be going over some of the basics of Traefik and how dynamic routing works. If you want to skip to the good stuff and get everything configured with Cloudflare, you can skip to [part 2](https://wordpress.hackanooga.com/traefik-with-lets-encrypt-and-cloudflare-pt-2/).

This example sets up the primary Traefik container, which acts as the ingress controller, as well as a handy One Time Secret sharing [service](https://github.com/Luzifer/ots) I use. Traefik handles routing in Docker via labels. For this to work properly, the services that Traefik routes to all need to be on the same Docker network as Traefik. For this example we created a network called `traefik` by running the following:

```
docker network create traefik
```
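If you script your setup, keep in mind that `docker network create` fails when the network already exists. Here is a minimal sketch of an idempotent wrapper, assuming a POSIX shell. The function name `ensure_network` is my own and not part of Docker:

```shell
# Create a Docker network only if it does not exist yet.
# ensure_network is a hypothetical helper name, not a Docker command.
ensure_network() {
  name=$1
  if docker network inspect "$name" >/dev/null 2>&1; then
    echo "network $name already exists"
  else
    docker network create "$name" >/dev/null && echo "network $name created"
  fi
}
```

Calling `ensure_network traefik` should then be safe to run as many times as you like.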


Let’s take a look at the labels we applied to the `ots` container a little closer:

```
labels:
  - traefik.enable=true
  - traefik.http.routers.ots.rule=Host(`ots.example.com`)
  - traefik.http.routers.ots.entrypoints=websecure
  - traefik.http.routers.ots.tls=true
  - traefik.http.routers.ots.tls.certresolver=letsencrypt
  - traefik.http.services.ots.loadbalancer.server.port=3000
```

`traefik.enable=true` – This should be pretty self-explanatory, but it tells Traefik that we want it to know about this service.

``traefik.http.routers.ots.rule=Host(`ots.example.com`)`` – This is where some of the magic comes in. Here we are defining a router called `ots`. The name is arbitrary in that it doesn’t have to match the name of the service, but for our example it does. There are many rules that you can specify, but the easiest for this example is `Host`. Basically we are saying that any request coming in for ots.example.com should be picked up by this router. You can find more options for routers in the Traefik [docs](https://doc.traefik.io/traefik/routing/routers/).
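To see the `Host` rule in action before DNS is in place, you can pin the hostname to the proxy's address with curl's `--resolve` option. This is a rough sketch: `check_route` is a hypothetical helper, and the default of `127.0.0.1` assumes you are running it on the Docker host itself:

```shell
# Print the HTTP status code Traefik returns for a given Host.
# check_route is a hypothetical helper; the address defaults to localhost.
check_route() {
  host=$1
  addr=${2:-127.0.0.1}
  # -k skips certificate verification, handy while certs are still being issued.
  curl -sk -o /dev/null -w '%{http_code}\n' \
    --resolve "$host:443:$addr" "https://$host/"
}
```

`check_route ots.example.com` should print whatever status the `ots` service answers with, while a hostname that no router matches will typically get a 404 from Traefik.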

- `traefik.http.routers.ots.entrypoints=websecure`
- `traefik.http.routers.ots.tls=true`
- `traefik.http.routers.ots.tls.certresolver=letsencrypt`

We are using these three labels to tell our router that we want it to use the `websecure` entrypoint, that TLS should be enabled, and that it should use the `letsencrypt` certresolver to grab its certificates. `websecure` is an arbitrary name that we assigned to our :443 interface. There are multiple ways to configure this; I chose to use the CLI format in my Traefik config:

```
command:
  - --api.insecure=true
  - --providers.docker=true
  # Our entrypoint names are arbitrary but these are convention.
  # The important part is the port binding that we associate.
  - --entrypoints.web.address=:80
  - --entrypoints.websecure.address=:443
```

This last label is optional depending on your setup, but it is important to understand as the documentation is a little fuzzy.

- `traefik.http.services.ots.loadbalancer.server.port=3000`

Here’s how it works. Suppose you have a container that exposes multiple ports. Maybe one of those is a web UI and another is something that you don’t want exposed. By default Traefik will try to guess which port to route requests to. My understanding is that it will try to use the first exposed port. However, you can override this behavior with the label above, which tells Traefik specifically which port inside the container you want to route to.
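If you are unsure which ports an image declares, you can ask Docker before deciding whether you need the label. A small sketch, assuming the image has already been pulled locally. `exposed_ports` is a name I made up for illustration:

```shell
# List the ports an image declares via EXPOSE, as JSON.
# exposed_ports is a hypothetical helper name.
exposed_ports() {
  docker image inspect --format '{{json .Config.ExposedPorts}}' "$1"
}
```

For example, `exposed_ports luzifer/ots`. If more than one port shows up, or none at all, that is a good hint to set the `loadbalancer.server.port` label explicitly rather than rely on guessing.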


The service name is derived automatically from the definition in the docker compose file:

```
  ots: # This will become the service name
    image: luzifer/ots
    container_name: ots
```


@ -0,0 +1,125 @@

---
author: mikeconrad
categories:
- Automation
- Cloudflare
- Docker
- Traefik
dark_fusion_page_sidebar:
- sidebar-1
dark_fusion_site_layout:
- ""
date: "2024-02-15T15:19:12Z"
guid: https://wordpress.hackanooga.com/?p=425
id: 425
tags:
- Blog Post
title: Traefik with Let’s Encrypt and Cloudflare (pt 2)
url: /traefik-with-lets-encrypt-and-cloudflare-pt-2/
---

In this article we are gonna get into setting up Traefik to request dynamic certs from Let’s Encrypt. I had a few issues getting this up and running and the documentation is a little fuzzy. In my case I decided to go with the DNS challenge route. Really the only reason I went with this option is because I was having issues with the TLS and HTTP challenges. Well, as it turns out, my issues didn’t have as much to do with my configuration as they did with my router.

Sometime in the past I had set up some special rules on my router to force all clients on my network to send DNS requests through a self hosted DNS server. I did this to keep some of my “smart” devices from misbehaving by blocking their access to the outside world. As it turns out, some devices will ignore the DNS servers that you hand out via DHCP and will use their own instead. That is, of course, unless you force DNS redirection, but that is another post for another day.


Let’s revisit our current configuration:

```
version: '3'

services:
  reverse-proxy:
    # The official v2 Traefik docker image
    image: traefik:v2.11
    # Enables the web UI and tells Traefik to listen to docker
    command:
      - --api.insecure=true
      - --providers.docker=true
      - --providers.file.filename=/config.yml
      - --entrypoints.web.address=:80
      - --entrypoints.websecure.address=:443
      # Set up LetsEncrypt
      - --certificatesresolvers.letsencrypt.acme.dnschallenge=true
      - --certificatesresolvers.letsencrypt.acme.dnschallenge.provider=cloudflare
      - --certificatesresolvers.letsencrypt.acme.email=mikeconrad@onmail.com
      - --certificatesresolvers.letsencrypt.acme.storage=/letsencrypt/acme.json
      - --entryPoints.web.http.redirections.entryPoint.to=websecure
      - --entryPoints.web.http.redirections.entryPoint.scheme=https
      - --entryPoints.web.http.redirections.entrypoint.permanent=true
      - --log=true
      - --log.level=INFO
      # - '--certificatesresolvers.letsencrypt.acme.caserver=https://acme-staging-v02.api.letsencrypt.org/directory'

    environment:
      - CF_DNS_API_TOKEN=${CF_DNS_API_TOKEN}
    ports:
      # The HTTP port
      - "80:80"
      - "443:443"
      # The Web UI (enabled by --api.insecure=true)
      - "8080:8080"
    volumes:
      # So that Traefik can listen to the Docker events
      - /var/run/docker.sock:/var/run/docker.sock:ro
      - ./letsencrypt:/letsencrypt
      - ./volumes/traefik/logs:/logs
      - ./traefik/config.yml:/config.yml:ro
    networks:
      - traefik
  ots:
    image: luzifer/ots
    container_name: ots
    restart: always
    environment:
      # Optional, see "Customization" in README
      #CUSTOMIZE: '/etc/ots/customize.yaml'
      # See README for details
      REDIS_URL: redis://redis:6379/0
      # 168h = 1w
      SECRET_EXPIRY: "604800"
      # "mem" or "redis" (See README)
      STORAGE_TYPE: redis
    depends_on:
      - redis
    labels:
      - traefik.enable=true
      - traefik.http.routers.ots.rule=Host(`ots.hackanooga.com`)
      - traefik.http.routers.ots.entrypoints=websecure
      - traefik.http.routers.ots.tls=true
      - traefik.http.routers.ots.tls.certresolver=letsencrypt
    networks:
      - traefik
  redis:
    image: redis:alpine
    restart: always
    volumes:
      - ./redis-data:/data
    networks:
      - traefik
networks:
  traefik:
    external: true
```
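One thing worth checking before you start the stack: Traefik is picky about the permissions on `acme.json` and, as far as I know, will refuse to use it if the file is accessible to other users. Here is a minimal sketch that pre-creates the file in the `./letsencrypt` directory mounted above. `prepare_acme_storage` is a hypothetical helper, not something Traefik ships:

```shell
# Pre-create the ACME storage file with owner-only permissions.
# prepare_acme_storage is a hypothetical helper; the directory defaults to
# ./letsencrypt, the bind mount used in the compose file.
prepare_acme_storage() {
  dir=${1:-./letsencrypt}
  mkdir -p "$dir"
  touch "$dir/acme.json"
  chmod 600 "$dir/acme.json"
}
```

Running `prepare_acme_storage` from the directory that holds your compose file should be enough. If you let Traefik create the file itself, this step is likely unnecessary.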


Now that we have all of this in place there are a couple more things we need to do on the Cloudflare side:

### Step 1: Set up wildcard DNS entry


This is pretty straightforward. Follow the Cloudflare [documentation](https://developers.cloudflare.com/dns/manage-dns-records/reference/wildcard-dns-records/) if you aren’t familiar with setting this up.


### Step 2: Create API Token

This is where the Traefik documentation is a little lacking. I had some issues getting this set up initially but ultimately found this [documentation](https://go-acme.github.io/lego/dns/cloudflare/), which pointed me in the right direction. In your Cloudflare account you will need to create an API token. Navigate to the dashboard, go to your profile -> API Tokens and create a new token. It should have the following permissions:

```
Zone.Zone.Read
Zone.DNS.Edit
```

Also be sure to give it permission to access all zones in your account. Now simply provide that token when starting up the stack and you should be good to go:

```
CF_DNS_API_TOKEN=[redacted] docker compose up -d
```
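If certificates are not being issued, it can help to rule out the token first. Cloudflare exposes a token verification endpoint, and the sketch below wraps it. `verify_cf_token` is a hypothetical helper, and it assumes `CF_DNS_API_TOKEN` is set in your shell:

```shell
# Ask the Cloudflare API whether the token in CF_DNS_API_TOKEN is valid.
# verify_cf_token is a hypothetical helper name.
verify_cf_token() {
  if [ -z "${CF_DNS_API_TOKEN:-}" ]; then
    echo "CF_DNS_API_TOKEN is not set" >&2
    return 1
  fi
  curl -s "https://api.cloudflare.com/client/v4/user/tokens/verify" \
    -H "Authorization: Bearer $CF_DNS_API_TOKEN"
}
```

A working token should come back with a JSON body that reports success. Note that this only tells you the token is valid, not that it has the zone permissions listed above.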


@ -0,0 +1,54 @@

---
author: mikeconrad
categories:
- Automation
- Docker
- OCI
- Self Hosted
dark_fusion_page_sidebar:
- sidebar-1
dark_fusion_site_layout:
- ""
date: "2024-03-07T10:07:07Z"
guid: https://wordpress.hackanooga.com/?p=413
id: 413
tags:
- Blog Post
title: Self hosted package registries with Gitea
url: /self-hosted-package-registries-with-gitea/
---

I am a big proponent of open source technologies. I have been using [Gitea](https://about.gitea.com/) for a couple years now in my homelab. A few years ago I moved most of my code off of GitHub and onto my self hosted instance. I recently came across a really handy feature that I didn’t know Gitea had and was pleasantly surprised by: [Package Registry](https://docs.gitea.com/usage/packages/overview?_highlight=packag). You are no doubt familiar with what a package registry is in the broad context. Here are some examples of package registries you probably use on a regular basis:

- npm
- cargo
- docker
- composer
- nuget
- helm

There are a number of reasons why you would want to self host a registry. For example, in my home lab I have some `Docker` images that are specific to my use cases and I don’t necessarily want them on a public registry. I’m also not concerned about losing the artifacts as I can easily recreate them from code. Gitea makes this really easy to set up; in fact, it comes baked in with the installation. For the sake of this post I will just assume that you already have Gitea installed and set up.


Since the package registry is baked in and enabled by default, I will demonstrate how easy it is to push a docker image. We will pull the default `alpine` image, re-tag it and push it to our internal registry:

```
# Pull the official Alpine image
docker pull alpine:latest

# Re tag the image with our local registry information
docker tag alpine:latest git.hackanooga.com/mikeconrad/alpine:latest

# Login using your gitea user account
docker login git.hackanooga.com

# Push the image to our registry
docker push git.hackanooga.com/mikeconrad/alpine:latest
```
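If you find yourself mirroring more than one image, the pull, tag and push steps above can be wrapped in a small function. This is just a sketch: `push_to_registry` is a hypothetical helper, and it assumes you have already run `docker login` against your registry:

```shell
# Pull an image, re-tag it for a private registry and push it.
# push_to_registry is a hypothetical helper; arguments are
# <image> <registry host> <owner>.
push_to_registry() {
  image=$1
  target="$2/$3/$image"
  docker pull "$image" &&
    docker tag "$image" "$target" &&
    docker push "$target"
}
```

With that in place, `push_to_registry alpine:latest git.hackanooga.com mikeconrad` does the same thing as the commands above, and stops at the first step that fails.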


Now log into your Gitea instance, navigate to your user account and look for `packages`. You should see the newly uploaded alpine image.

You can see that the package type is container. Clicking on it will give you more information:


@ -0,0 +1,197 @@

---
author: mikeconrad
categories:
- Ansible
- Automation
- CI/CD
- TeamCity
dark_fusion_page_sidebar:
- sidebar-1
dark_fusion_site_layout:
- ""
date: "2024-03-11T09:37:47Z"
guid: https://wordpress.hackanooga.com/?p=393
id: 393
tags:
- Blog Post
title: Automating CI/CD with TeamCity and Ansible
url: /automating-ci-cd-with-teamcity-ansible/
---

In part one of this series we are going to explore a CI/CD option you may not be familiar with but should definitely be on your radar. I used JetBrains TeamCity for several months at my last company and really enjoyed my time with it. A couple of the things I like most about it are:

- Ability to declare global variables and have them be passed down to all projects
- Ability to declare variables that are made up of other variables

I like to use private or self hosted Docker registries for a lot of my projects, and one of the pain points I have had with some other solutions (well, mostly Bitbucket) is that they don’t integrate well with these private registries. When I run into a situation where I am pushing an image to or pulling an image from a private registry, it gets a little messy. TeamCity is nice in that I can add a connection to my private registry in my root project and then simply add that as a build feature to any projects that may need it. Essentially, I now only have one place where I have to keep those credentials and manage that connection.


Another reason I love it is the fact that you can create really powerful build templates that you can reuse. This became very powerful when we were trying to standardize our build processes. For example, most of the apps we build are `.NET` backends and `React` frontends. We built docker images for every project and pushed them to our private registry. TeamCity gave us the ability to standardize the naming convention and really streamline the build process. Enough about that though, the rest of this series will assume that you are using TeamCity. This post will focus on getting up and running using Ansible.


---


## Installation and Setup


For this I will assume that you already have Ansible on your machine and that you will be installing TeamCity locally. You can simply follow along with the installation guide [here](https://www.jetbrains.com/help/teamcity/install-teamcity-server-on-linux-or-macos.html#Example%3A+Installation+using+Ubuntu+Linux). We will be creating an Ansible playbook based on the following steps. If you just want the finished code, you can find it on my Gitea instance [here](https://git.hackanooga.com/mikeconrad/teamcity-ansible-scripts.git):

#### Step 1: Create project and initial playbook


To get started go ahead and create a new directory to hold our configuration:

```
mkdir -p ~/projects/teamcity-configuration-ansible
cd ~/projects/teamcity-configuration-ansible
touch install-teamcity-server.yml
```

Now open up `install-teamcity-server.yml` and add a task to install Java 17, as it is a prerequisite. You will need sudo for this task. **Note:** As of this writing TeamCity does not support Java 18 or 19. If you try to install one of these you will get an error when trying to start TeamCity.

```
---
- name: Install Teamcity
  hosts: localhost
  become: true
  become_method: sudo

  # Add some variables to make our lives easier
  vars:
    java_version: "17"
    teamcity:
      installation_path: /opt/TeamCity
      version: "2023.11.4"

  tasks:
    - name: Install Java
      ansible.builtin.apt:
        name: openjdk-{{ java_version }}-jre-headless
        update_cache: yes
        state: latest
        install_recommends: no
```

The next step is to create a dedicated user account. Add the following task to `install-teamcity-server.yml`:

```
    - name: Add Teamcity User
      ansible.builtin.user:
        name: teamcity
```

Next we will need to download the latest version of TeamCity. 2023.11.4 is the latest as of this writing. Add the following task to your `install-teamcity-server.yml`:

```
    - name: Download TeamCity Server
      ansible.builtin.get_url:
        url: https://download.jetbrains.com/teamcity/TeamCity-{{teamcity.version}}.tar.gz
        dest: /opt/TeamCity-{{teamcity.version}}.tar.gz
        mode: '0770'
```


Now to install TeamCity Server add the following:

```
    - name: Install TeamCity Server
      ansible.builtin.shell: |
        tar xfz /opt/TeamCity-{{teamcity.version}}.tar.gz
        rm -rf /opt/TeamCity-{{teamcity.version}}.tar.gz
      args:
        chdir: /opt
```


Now that we have everything set up and installed we want to make sure that our new `teamcity` user has access to everything they need to get up and running. We will add the following lines:

```
    - name: Update permissions
      ansible.builtin.shell: chown -R teamcity:teamcity /opt/TeamCity
```


This gives us a pretty nice setup. We have TeamCity server installed with a dedicated user account. The last thing we will do is create a `systemd` service so that we can easily start/stop the server. For this we will need to add a few things.

1. A service file that tells our system how to manage TeamCity
2. A j2 template file that is used to create this service file
3. A handler that tells the system to run `systemctl daemon-reload` once the service has been installed.

Go ahead and create a new `templates` folder with the following `teamcity.service.j2` file:

```
[Unit]
Description=JetBrains TeamCity
Requires=network.target
After=syslog.target network.target

[Service]
Type=forking
ExecStart={{teamcity.installation_path}}/bin/runAll.sh start
ExecStop={{teamcity.installation_path}}/bin/runAll.sh stop
User=teamcity
PIDFile={{teamcity.installation_path}}/teamcity.pid
Environment="TEAMCITY_PID_FILE_PATH={{teamcity.installation_path}}/teamcity.pid"

[Install]
WantedBy=multi-user.target
```


Your project should now look like the following:

```
$: ~/projects/teamcity-configuration-ansible
.
├── install-teamcity-server.yml
└── templates
    └── teamcity.service.j2

1 directory, 2 files
```

That’s it! Now you should have a fully automated install of TeamCity Server ready to be deployed wherever you need it. Here is the final playbook file. You can also find the most up to date version in my [repo](https://git.hackanooga.com/mikeconrad/teamcity-ansible-scripts.git):

```
---
- name: Install Teamcity
  hosts: localhost
  become: true
  become_method: sudo

  vars:
    java_version: "17"
    teamcity:
      installation_path: /opt/TeamCity
      version: "2023.11.4"

  tasks:
    - name: Install Java
      ansible.builtin.apt:
        name: openjdk-{{ java_version }}-jdk # This is important because TeamCity will fail to start if we try to use 18 or 19
        update_cache: yes
        state: latest
        install_recommends: no

    - name: Add TeamCity User
      ansible.builtin.user:
        name: teamcity

    - name: Download TeamCity Server
      ansible.builtin.get_url:
        url: https://download.jetbrains.com/teamcity/TeamCity-{{teamcity.version}}.tar.gz
        dest: /opt/TeamCity-{{teamcity.version}}.tar.gz
        mode: '0770'

    - name: Install TeamCity Server
      ansible.builtin.shell: |
        tar xfz /opt/TeamCity-{{teamcity.version}}.tar.gz
        rm -rf /opt/TeamCity-{{teamcity.version}}.tar.gz
      args:
        chdir: /opt

    - name: Update permissions
      ansible.builtin.shell: chown -R teamcity:teamcity /opt/TeamCity

    - name: TeamCity | Create environment file
      template: src=teamcity.service.j2 dest=/etc/systemd/system/teamcityserver.service
      notify:
        - reload systemctl
    - name: TeamCity | Start teamcity
      service: name=teamcityserver.service state=started enabled=yes

  # Trigger a reload of systemctl after the service file has been created.
  handlers:
    - name: reload systemctl
      command: systemctl daemon-reload
```
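To actually run the playbook, something like the following should work. This is a sketch that assumes the playbook lives in the current directory. `run_teamcity_playbook` is a hypothetical wrapper, and `--ask-become-pass` is only needed if your user requires a sudo password:

```shell
# Syntax-check the playbook first, then run it.
# run_teamcity_playbook is a hypothetical wrapper name.
run_teamcity_playbook() {
  playbook=${1:-install-teamcity-server.yml}
  ansible-playbook --syntax-check "$playbook" &&
    ansible-playbook --ask-become-pass "$playbook"
}
```

Once it finishes, TeamCity should be reachable on its default port, so `http://localhost:8111` is the first place I would look.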


@ -0,0 +1,23 @@

---
author: mikeconrad
categories:
- Automation
- Docker
- Software Engineering
date: "2024-04-03T09:12:41Z"
guid: https://hackanooga.com/?p=557
id: 557
image: /wp-content/uploads/2024/04/docker-logo-blue-min.png
tags:
- Blog Post
title: Stop all running containers with Docker
url: /stop-all-running-containers-with-docker/
---


These are some handy snippets I use on a regular basis when managing containers. I have one server in particular that can sometimes end up with 50 to 100 orphaned containers for various reasons. The easiest/quickest way to stop all of them is to do something like this:

```
docker container stop $(docker container ps -q)
```

Let me break this down in case you are not familiar with the syntax. Basically we are passing the output of `docker container ps -q` into `docker container stop`. This works because the stop command can take a list of container IDs, which is what we get when passing the `-q` flag to `docker container ps`.
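One caveat: if nothing is running, the command substitution is empty and `docker container stop` complains that it needs at least one argument. If you want something you can drop into a script, here is a minimal sketch that handles that case. `stop_all_containers` is a hypothetical helper name:

```shell
# Stop every running container, doing nothing when none are running.
# stop_all_containers is a hypothetical helper name.
stop_all_containers() {
  ids=$(docker container ps -q)
  if [ -z "$ids" ]; then
    echo "no running containers"
    return 0
  fi
  # Unquoted on purpose so each ID becomes its own argument.
  docker container stop $ids
}
```

Calling `stop_all_containers` then behaves like the one-liner above, but exits cleanly on an idle host.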


@ -0,0 +1,162 @@

---
author: mikeconrad
categories:
- Ansible
- Automation
- Docker
- Software Engineering
- Traefik
date: "2024-05-11T09:44:01Z"
guid: https://hackanooga.com/?p=564
id: 564
tags:
- Blog Post
title: Traefik 3.0 service discovery in Docker Swarm mode
url: /traefik-3-0-service-discovery-in-docker-swarm-mode/
---


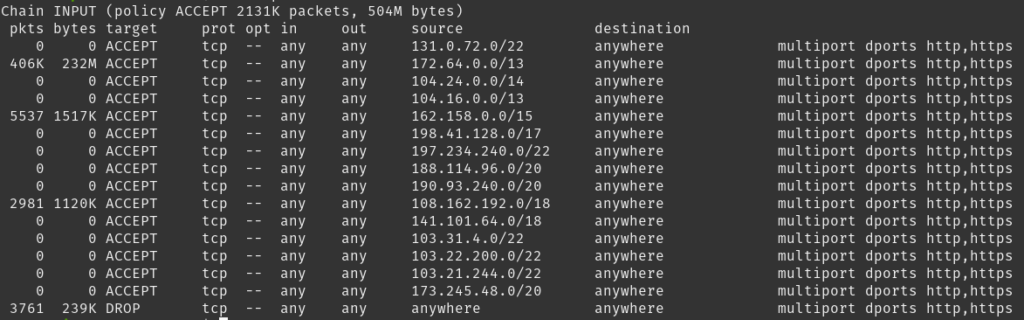

I recently decided to set up a Docker swarm cluster for a project I was working on. If you aren’t familiar with Swarm mode, it is similar in some ways to k8s but with much less complexity and it is built into Docker. If you are looking for a fairly straightforward way to deploy containers across a number of nodes without all the overhead of k8s it can be a good choice, however it isn’t a very popular or widespread solution these days.


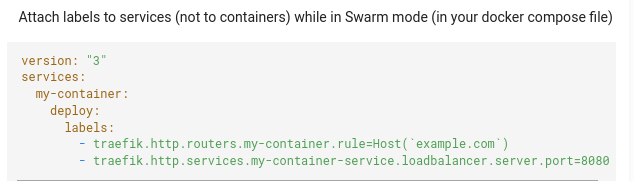

Anyway, I set up a VM scale set in Azure with 10 Ubuntu 22.04 VMs and wrote some Ansible scripts to automate the process of installing Docker on each machine, as well as setting 3 up as swarm managers and the other 7 as worker nodes. I ssh’d into the primary manager node and created a docker compose file for launching an observability stack.
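Once the Ansible run finishes, a quick sanity check from a manager node confirms the split between managers and workers. A small sketch: `count_swarm_nodes` is a hypothetical helper built on `docker node ls`:

```shell
# Count swarm nodes with a given role ("manager" or "worker").
# count_swarm_nodes is a hypothetical helper; run it on a manager node.
count_swarm_nodes() {
  docker node ls --filter "role=$1" --format '{{.Hostname}}' | wc -l | tr -d ' '
}
```

With the cluster described above, `count_swarm_nodes manager` should print 3 and `count_swarm_nodes worker` should print 7.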


Here is what that `docker-compose.yml` looks like:


```

---
services:
  otel-collector:
    image: otel/opentelemetry-collector-contrib:0.88.0
    volumes:
      - /home/user/repo/common/devops/observability/otel-config.yaml:/etc/otel/config.yaml
      - /home/user/repo/log:/log/otel
    command: --config /etc/otel/config.yaml
    environment:
      JAEGER_ENDPOINT: 'tempo:4317'
      LOKI_ENDPOINT: 'http://loki:3100/loki/api/v1/push'
    ports:
      - '8889:8889' # Prometheus metrics exporter (scrape endpoint)
      - '13133:13133' # health_check extension
      - '55679:55679' # ZPages extension
    deploy:
      placement:
        constraints:
          - node.hostname==dockerswa2V8BY4
    networks:
      - traefik
  prometheus:
    container_name: prometheus
    image: prom/prometheus:v2.42.0
    volumes:
      - /home/user/repo/common/devops/observability/prometheus.yml:/etc/prometheus/prometheus.yml
    ports:
      - '9090:9090'
    deploy:
      placement:
        constraints:
          - node.hostname==dockerswa2V8BY4
    networks:
      - traefik
  loki:
    container_name: loki
    image: grafana/loki:2.7.4
    ports:
      - '3100:3100'
    networks:
      - traefik
  grafana:
    container_name: grafana
    image: grafana/grafana:9.4.3
    volumes:
      - /home/user/repo/common/devops/observability/grafana-datasources.yml:/etc/grafana/provisioning/datasources/datasources.yml
    environment:
      GF_AUTH_ANONYMOUS_ENABLED: 'false'
      GF_AUTH_ANONYMOUS_ORG_ROLE: 'Admin'
    expose:
      - '3000'
    labels:
      - traefik.constraint-label=traefik
      - traefik.http.middlewares.https-redirect.redirectscheme.scheme=https
      - traefik.http.middlewares.https-redirect.redirectscheme.permanent=true
      - traefik.http.routers.grafana-http.rule=Host(`swarm-grafana.mydomain.com`)